Anyone can use the models to develop applications in generative, reasoning and physical AI, healthcare and manufacturing.

OpenAI’s open-weight text-reasoning large language models (LLMs) were trained on NVIDIA H100 GPUs and run inference best on the hundreds of millions of GPUs running the NVIDIA CUDA platform.

The models are now available as NVIDIA NIM microservices, offering deployment on any GPU-accelerated infrastructure with flexibility, data privacy and enterprise-grade security.

With software optimizations for the NVIDIA Blackwell platform, the models offer optimal inference on NVIDIA GB200 NVL72 systems, achieving 1.5 million tokens per second.

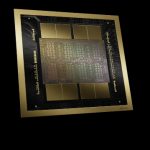

NVIDIA Blackwell (pictured) includes innovations such as NVFP4 4-bit precision, which enables high-accuracy inference while reducing power and memory requirements. This makes it possible to deploy trillion-parameter LLMs in real time.

NVIDIA CUDA lets users deploy and run AI models anywhere, from the NVIDIA DGX Cloud platform to NVIDIA GeForce RTX– and NVIDIA RTX PRO-powered PCs and workstations.

There are over 450 million NVIDIA CUDA downloads to date, and starting today, the massive community of CUDA developers gains access to these latest models, optimized to run on the NVIDIA technology stack they already use.

Demonstrating their commitment to open-sourcing software, OpenAI and NVIDIA have collaborated with top open framework providers to deliver model optimizations for FlashInfer, Hugging Face, llama.cpp, Ollama and vLLM, in addition to NVIDIA TensorRT-LLM and other libraries, so developers can build with their framework of choice.