The premise of Lumex is that AI is now the primary workload for mobile, and that economics dictates inference will be done at the edge.

“AI is no longer a feature, it’s the foundation of next-generation mobile and consumer technology,” said Arm SVP Chris Bergey.

The C1 CPU and the Mali G1 GPU are the fastest of their kind ever designed by Arm, though absolute performance figures weren’t quoted, only relative speed improvements compared to earlier cores.

The C1 cluster is said to deliver a 5x uplift in AI performance, 4.7x lower latency for speech-based workloads and 2.8x faster audio generation. C1 is also said to have achieved double-digit IPC growth.

There are four versions of C1: C1-Ultra delivers a 25% performance increase over the Neoverse core; C1-Premium delivers similar, but not equal, performance to Ultra in a 35% smaller area; C1-Pro delivers a 16% increase over Neoverse; C1-Nano reduces power consumption by up to 26%.

G1 is said to have 20% better performance than the Immortalis G925, 20% faster inference, 9% less energy per frame and a 2x improvement in ray-tracing performance.
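Because only relative figures were quoted, it can help to convert them to a common form. The sketch below is purely illustrative arithmetic on the numbers above: the helper names are hypothetical, and it assumes "N% less" means the new value is (100 − N)% of the old one and "Nx lower latency" means the new latency is 1/N of the old one. It says nothing about absolute performance.

```python
def fraction_after_reduction(percent: float) -> float:
    """New value as a fraction of the old one after an N% reduction."""
    return 1.0 - percent / 100.0


def fraction_after_speedup(factor: float) -> float:
    """New time as a fraction of the old one after an Nx speedup."""
    return 1.0 / factor


# G1: 9% less energy per frame (assumed to mean 0.91x the previous energy).
g1_energy_per_frame = fraction_after_reduction(9)
# Equivalent gain in frames rendered per unit of energy.
g1_frames_per_joule = 1.0 / g1_energy_per_frame

# C1: 4.7x lower latency for speech-based workloads.
c1_speech_latency = fraction_after_speedup(4.7)

# C1-Premium: 35% smaller area.
c1_premium_area = fraction_after_reduction(35)

print(f"G1 energy per frame: {g1_energy_per_frame:.2f}x of previous")
print(f"G1 frames per joule: {g1_frames_per_joule:.3f}x of previous")
print(f"C1 speech latency:   {c1_speech_latency:.3f}x of previous")
print(f"C1-Premium area:     {c1_premium_area:.2f}x of previous")
```

Under those assumptions, a 9% cut in energy per frame works out to roughly 10% more frames per joule, and 4.7x lower latency means a task takes about a fifth of the time it did before.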

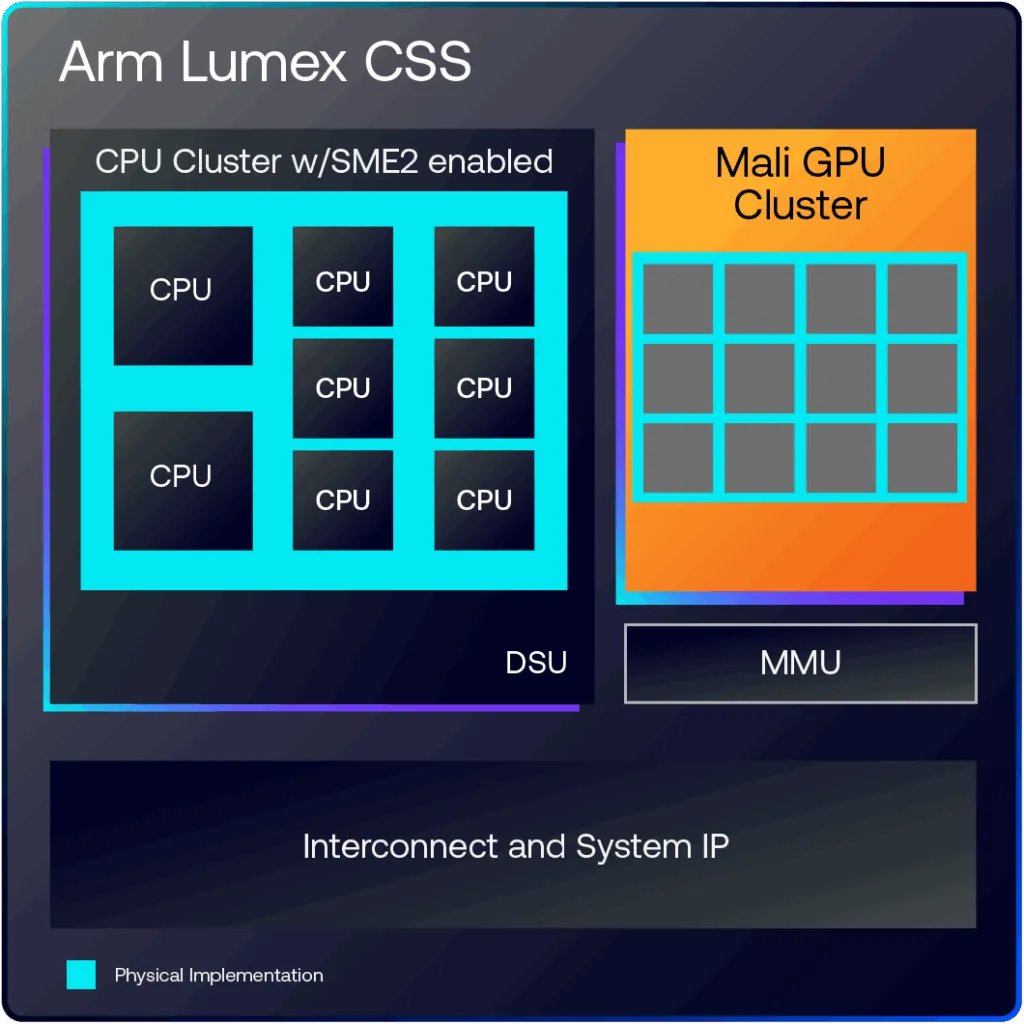

Arm has upgraded its cores to handle AI inference loads by introducing Scalable Matrix Extension (SME).

Arm’s KleidiAI software stack automatically takes advantage of SME/SME2, and Arm claims this gives developers performance improvements of 5x to 12x in LLMs and inference libraries with no code changes needed. SME2 will be enabled across every Arm CPU platform.

Lumex is tape-out ready on TSMC 3nm.