It will democratise trillion-parameter AI, promises Huang, delivering the accelerated computing performance needed for models with 1.8 trillion parameters.

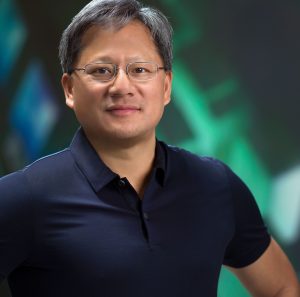

At the home of the San Jose Sharks ice hockey team, Jensen Huang took centre stage to announce the Blackwell platform: an AI superchip with a second-generation transformer engine and the company's fifth-generation NVLink high-speed GPU interconnect.

It is built to democratise generative AI operating at the trillion-parameter scale. Blackwell, named after the mathematician and game theorist David Blackwell, delivers 20 PFLOPS of AI performance at FP4 precision, or 10 PFLOPS at FP8, on a single GPU. A key design choice is to combine two reticle-sized dies that operate as a single, unified CUDA GPU. This represents a new class of superchip with a holistic architecture, likened to connecting the left and right sides of the brain: there is no separation between the two dies and no programming changes are required. Each GPU also provides 192GB of HBM3e memory with 8TB/s of bandwidth, plus 1.8TB/s of NVLink bandwidth. For AI workloads, Huang confirmed, it delivers four times the training performance, 30 times the inference performance and 25 times the energy efficiency of the previous generation.
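
To put the trillion-parameter claim in context, the back-of-the-envelope sketch below estimates how much memory the weights of a 1.8-trillion-parameter model occupy at different precisions, and how many 192GB GPUs would be needed just to hold them. It rests on stated assumptions: it counts weights only (no activations, KV cache or optimizer state), uses decimal gigabytes, and the function names are hypothetical, chosen for illustration.

```python
import math

# Figures taken from the article: 192GB of HBM3e per GPU, 1.8 trillion parameters.
HBM_PER_GPU_GB = 192
PARAMS = 1.8e12

# Standard storage width of one value in each format, in bytes.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_footprint_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed just to store the weights, in decimal gigabytes (hypothetical helper)."""
    return params * bytes_per_param / 1e9


def min_gpus_for_weights(params: float, bytes_per_param: float,
                         hbm_gb: float = HBM_PER_GPU_GB) -> int:
    """Lower bound on GPU count for the weights alone (ignores activations, KV cache, etc.)."""
    return math.ceil(weight_footprint_gb(params, bytes_per_param) / hbm_gb)


if __name__ == "__main__":
    for fmt, width in BYTES_PER_PARAM.items():
        gb = weight_footprint_gb(PARAMS, width)
        gpus = min_gpus_for_weights(PARAMS, width)
        print(f"{fmt}: {gb:,.0f} GB of weights -> at least {gpus} GPUs")
```

Under these assumptions, FP4 halves the weight footprint relative to FP8 (900GB versus 1,800GB), so the weights alone fit across five GPUs rather than ten; real deployments need considerably more memory than this lower bound.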

The fast memory is 192GB of HBM3e. This combination means the 'superchip' can scale an AI datacentre beyond 100,000 GPUs.

Another innovation, said Huang, is support for the second-generation transformer engine, also announced at GTC in San Jose this week. The engine tracks every layer of every tensor and adjusts numerical precision accordingly, using intelligent 4-bit precision to accelerate throughput.
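
The article does not describe how the transformer engine chooses or applies 4-bit precision. As a generic illustration only, the sketch below shows block-scaled rounding to the FP4 E2M1 format (the value set defined in the OCP Microscaling specification): a block of values is scaled so its largest magnitude maps to 6.0, the largest E2M1 value, and each element is rounded to the nearest representable value. This is an assumed, simplified technique, not NVIDIA's implementation; the function names are hypothetical, the scale is kept as a plain float rather than a hardware scale format, and ties round toward the smaller magnitude rather than to even.

```python
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1 (sign is stored separately).
FP4_E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block_fp4(block: List[float]) -> Tuple[List[float], float]:
    """Hypothetical helper: scale a block so max |x| maps to 6.0, then round each element to FP4."""
    amax = max((abs(v) for v in block), default=0.0)
    # One shared scale per block; fall back to 1.0 for an all-zero block.
    scale = amax / FP4_E2M1_MAGNITUDES[-1] if amax > 0 else 1.0
    codes = []
    for v in block:
        mag = abs(v) / scale
        # Nearest representable magnitude; min() keeps the first (smaller) one on ties.
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda m: abs(m - mag))
        codes.append(-nearest if v < 0 else nearest)
    return codes, scale


def dequantize_block(codes: List[float], scale: float) -> List[float]:
    """Map FP4 codes back to approximate original values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    codes, scale = quantize_block_fp4([0.1, -0.6, 1.2, 3.0])
    print(codes, scale)                     # [0.0, -1.0, 2.0, 6.0] 0.5
    print(dequantize_block(codes, scale))   # [0.0, -0.5, 1.0, 3.0]
```

The example shows the trade-off that any 4-bit scheme must manage: the largest value survives exactly, while small values such as 0.1 are lost to rounding, which is why practical formats use small blocks with their own scale factors.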