“Our algorithm leverages classical topological path planning and deep reinforcement learning,” according to the University’s Robotics and Perception Group.

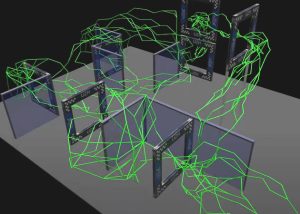

In the first step (right), many collision-free paths are found using a probabilistic roadmap method.
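
As a rough illustration of the probabilistic roadmap idea, the sketch below samples random collision-free points in a 2D square, links nearby points whose connecting segment is clear, and then searches the resulting graph. It is a minimal sketch, not the group's implementation: the 10 x 10 workspace, circular obstacles, connection radius and all function names are assumptions made for the example. It also returns only a single shortest path, whereas the research collects many distinct paths.

```python
import heapq
import math
import random


def segment_is_free(p, q, obstacles, steps=20):
    """Approximate collision check: sample points along the segment p-q."""
    for i in range(steps + 1):
        t = i / steps
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        for cx, cy, r in obstacles:
            if math.hypot(x - cx, y - cy) <= r:
                return False
    return True


def build_roadmap(start, goal, obstacles, n_samples=200, radius=2.5, seed=0):
    """Sample free points in an assumed 10 x 10 square and link close neighbours."""
    rng = random.Random(seed)
    nodes = [start, goal]  # index 0 is the start, index 1 is the goal
    while len(nodes) < n_samples + 2:
        p = (rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0))
        if all(math.hypot(p[0] - cx, p[1] - cy) > r for cx, cy, r in obstacles):
            nodes.append(p)
    edges = {i: [] for i in range(len(nodes))}
    for i in range(len(nodes)):
        for j in range(i + 1, len(nodes)):
            d = math.hypot(nodes[i][0] - nodes[j][0], nodes[i][1] - nodes[j][1])
            if d <= radius and segment_is_free(nodes[i], nodes[j], obstacles):
                edges[i].append((j, d))
                edges[j].append((i, d))
    return nodes, edges


def shortest_path(nodes, edges, src=0, dst=1):
    """Dijkstra search over the roadmap; returns waypoints or None."""
    dist = {src: 0.0}
    prev = {}
    heap = [(0.0, src)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == dst:
            break
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, w in edges[u]:
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if dst not in dist:
        return None
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return [nodes[i] for i in reversed(path)]


if __name__ == "__main__":
    obstacles = [(5.0, 5.0, 1.5)]  # hypothetical obstacle: (centre x, centre y, radius)
    nodes, edges = build_roadmap((1.0, 1.0), (9.0, 9.0), obstacles)
    print(shortest_path(nodes, edges))
```

Sampling more points or enlarging the connection radius makes the roadmap denser, which raises the chance that several routes around each obstacle appear in the graph.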

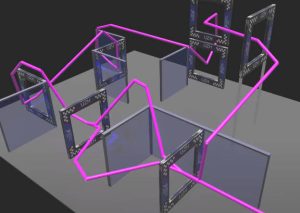

After this, the paths are filtered with different obstacle avoidance strategies (below left).

These paths, plus knowledge of the physical dynamics of the real or modelled vehicle, are used to guide the reinforcement learning algorithm, whose goal is to create a policy that maximises quadcopter progress along the chosen path while avoiding obstacles.
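
One way to picture a "progress along the path" objective is a reward equal to how far the vehicle's closest point on the guiding path has advanced since the previous time step, minus a penalty on collision. The sketch below is an assumption made for illustration, not the reward used in the paper: the 2D polyline path, the function names and the penalty value are all hypothetical.

```python
import math


def arc_length_along_path(path, pos):
    """Distance along the polyline `path` to the point on it closest to `pos`."""
    best_d2 = math.inf
    best_s = 0.0
    s_start = 0.0  # arc length at the start of the current segment
    for (ax, ay), (bx, by) in zip(path, path[1:]):
        dx, dy = bx - ax, by - ay
        seg_len2 = dx * dx + dy * dy
        if seg_len2 == 0.0:
            continue  # skip degenerate segments
        # Project pos onto the segment and clamp to its end points.
        t = ((pos[0] - ax) * dx + (pos[1] - ay) * dy) / seg_len2
        t = max(0.0, min(1.0, t))
        px, py = ax + t * dx, ay + t * dy
        d2 = (pos[0] - px) ** 2 + (pos[1] - py) ** 2
        seg_len = math.sqrt(seg_len2)
        if d2 < best_d2:
            best_d2 = d2
            best_s = s_start + t * seg_len
        s_start += seg_len
    return best_s


def progress_reward(path, prev_pos, pos, collided, collision_penalty=10.0):
    """Reward forward progress along the path; subtract a penalty on collision."""
    reward = arc_length_along_path(path, pos) - arc_length_along_path(path, prev_pos)
    if collided:
        reward -= collision_penalty  # hypothetical penalty weight
    return reward


if __name__ == "__main__":
    guiding_path = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0)]
    print(progress_reward(guiding_path, (2.0, 1.0), (5.0, 2.0), collided=False))
```

Because the reward depends only on progress between consecutive steps, a policy collects more of it per step by moving faster along the path, which is what pushes learning towards minimum-time flight.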

Reinforcement learning uses trial and error to optimise its parameters, and can handle non-linear dynamic systems.

“All this information is used by the neural network to compute a desired collective thrust,” according to the research team. “The policy is then trained, where it first learns to fly slowly around a track and avoid collisions. After this, the constraints on velocity and distance from the guiding path are removed and the policy learns to fly around the track in a minimum time.”
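
The two-stage training described in the quote can be thought of as a small curriculum: a first stage with limits on speed and on distance from the guiding path, then a second stage with both limits removed. The sketch below only illustrates that structure. The stage names, the numeric limits and the `violates_constraints` helper are hypothetical, and the article does not say how the constraints are actually enforced during training.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Stage:
    name: str
    max_speed: Optional[float]          # m/s; None means unconstrained
    max_path_distance: Optional[float]  # m; None means unconstrained


# Hypothetical values, chosen only to make the example concrete.
CURRICULUM = [
    Stage("slow_guided", max_speed=3.0, max_path_distance=0.5),
    Stage("minimum_time", max_speed=None, max_path_distance=None),
]


def violates_constraints(stage: Stage, speed: float, path_distance: float) -> bool:
    """True if the current state breaks a limit that is active in this stage."""
    if stage.max_speed is not None and speed > stage.max_speed:
        return True
    if stage.max_path_distance is not None and path_distance > stage.max_path_distance:
        return True
    return False


if __name__ == "__main__":
    for stage in CURRICULUM:
        # The same fast, off-path state is checked against each stage.
        print(stage.name, violates_constraints(stage, speed=8.0, path_distance=1.2))
```

A training loop could, for example, penalise or end an episode whenever the check returns true, then switch to the second stage once the policy reliably completes the track.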

In two real-world experiments (right), the trained algorithm flew the drone around the course with thrust limited to 16N in one and 28N in the other. Even on the contorted, confined experimental course, it reached 42km/h and experienced accelerations of up to 3.6g.

“We showed that the trained policy outperforms the state-of-the-art algorithms in complex track layouts, and it is significantly more robust than classical planning tracking pipelines,” according to the team.

The research is described in the paper ‘Learning minimum-time flight in cluttered environments’, published in IEEE Robotics and Automation Letters.

The Robotics and Perception Group points to a pre-print of the paper here, and there is also a video, from which the above images were taken.