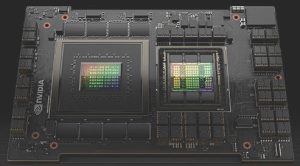

With up to 144 Arm v9 cores, Grace CPUs (right) are aimed at supercomputers and cloud computing, and will run the company’s software stacks and platforms, including RTX, HPC, AI and Omniverse.

Error-corrected LPDDR5x memory is implemented, and total bandwidth is up to 1Tbyte/s.

Estimated top performance is >740 SPECrate 2017_int_base.

Adding to this, its Grace Hopper processor (left) will combine the company’s Grace and Hopper architectures to create a CPU+GPU coherent memory model for AI processing and HPC (supercomputing).

The processors above will use Nvidia’s NVLink-C2C 900Gbyte/s bidirectional interface internally, which in the Grace Hopper case provides a unified cache-coherent memory address space for the CPU and GPU.

The interface is claimed to be 7x faster than PCIe Gen 5 and, compared to the company’s PCIe Gen 5 PHY, up to 25x more energy-efficient and 90x more area-efficient. It is intended to be used on PCBs, within multi-chip modules, on silicon interposers and for wafer-level connections.
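
As a rough sanity check on the 7x figure, the arithmetic can be sketched in a few lines of Python. Nvidia's basis for the comparison is not stated here, so this sketch assumes a 16-lane PCIe Gen 5 link counted in both directions, using the standard 32GT/s per-lane rate and 128b/130b encoding; the function names are illustrative only.

```python
# Rough check of the "7x faster than PCIe Gen 5" claim.
# Assumption (not from the article): the comparison is against a
# x16 PCIe Gen 5 link, with bandwidth counted in both directions.

PCIE5_GT_PER_S = 32        # PCIe 5.0 raw transfer rate per lane, GT/s
ENCODING = 128 / 130       # 128b/130b line encoding overhead
LANES = 16                 # assumed link width


def pcie5_bidirectional_gbytes_per_s(lanes: int = LANES) -> float:
    """Approximate usable bandwidth of a PCIe Gen 5 link, both directions."""
    per_direction = PCIE5_GT_PER_S * ENCODING * lanes / 8  # bits -> bytes
    return 2 * per_direction


def nvlink_c2c_ratio(nvlink_gbytes_per_s: float = 900.0) -> float:
    """Ratio of the quoted NVLink-C2C bandwidth to the assumed PCIe link."""
    return nvlink_gbytes_per_s / pcie5_bidirectional_gbytes_per_s()


if __name__ == "__main__":
    print(f"PCIe Gen 5 x16: {pcie5_bidirectional_gbytes_per_s():.0f} Gbyte/s")
    print(f"NVLink-C2C ratio: {nvlink_c2c_ratio():.1f}x")
```

Under those assumptions the PCIe link comes out at roughly 126Gbyte/s, which puts the quoted 900Gbyte/s at a little over seven times that, in line with the claim.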

Further details of these processors are expected from Nvidia’s developer conference next week.