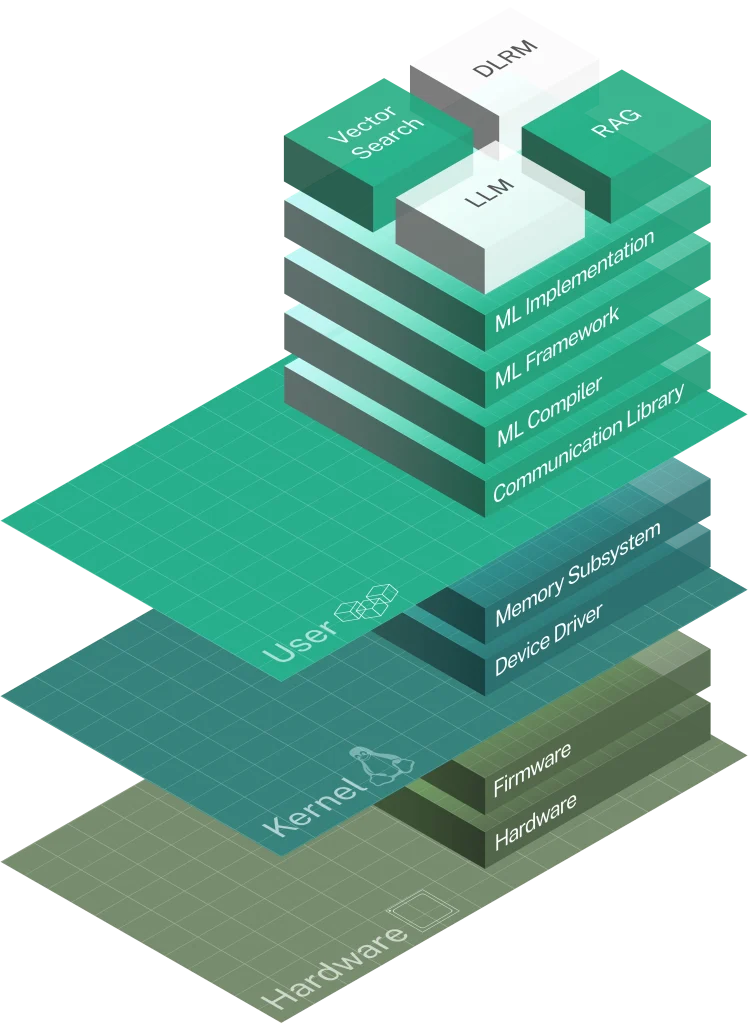

The line-up encompasses the entire technology stack required for AI infrastructure design, including hardware, silicon IPs, network topology, and software.

By delivering cross-stack optimization, Panmnesia’s Link Solution significantly reduces communication overhead and enhances overall system performance.

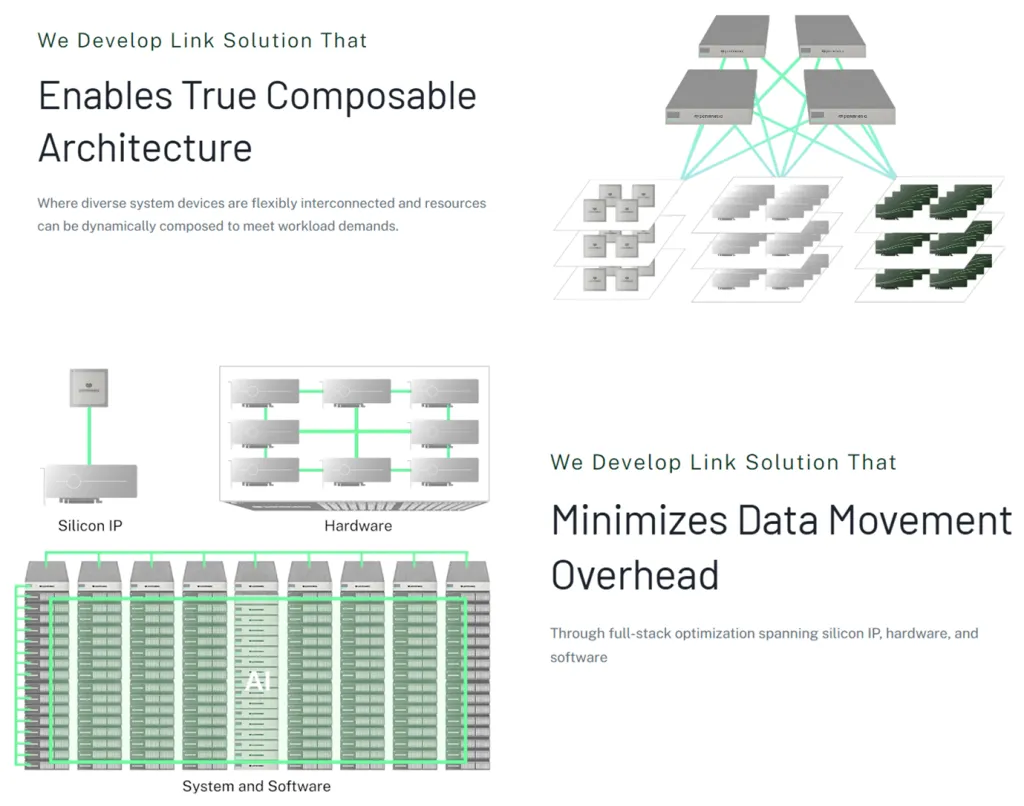

The Link Solution supports a wide range of connected devices and diverse interconnect methods, such as various network topologies and protocols.

This enables users to flexibly configure and interconnect resources, adapting to the dynamic requirements of rapidly evolving AI workloads.

Panmnesia’s approach is to enable composable architectures that allow seamless addition, removal, and reconfiguration of diverse devices such as GPUs, AI accelerators, and memory modules. This lets a system meet the evolving needs of AI applications while minimizing inter-device communication overhead.
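The idea of a composable architecture can be illustrated with a toy sketch. It models only the bookkeeping: devices join and leave a shared pool, and a host is bound to a set of them and later releases them. It does not model CXL, UALink, or any Panmnesia product or API. The names `Device`, `ResourcePool`, `compose`, and `release` are hypothetical and exist only for this illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Device:
    name: str
    kind: str  # e.g. "gpu", "accelerator", "memory" (hypothetical labels)


@dataclass
class ResourcePool:
    """Hypothetical pool of disaggregated devices shared by several hosts."""
    devices: Dict[str, Device] = field(default_factory=dict)
    owner: Dict[str, Optional[str]] = field(default_factory=dict)  # device -> host or None

    def add(self, dev: Device) -> None:
        # Hot-add: the new device becomes available to any host.
        self.devices[dev.name] = dev
        self.owner[dev.name] = None

    def remove(self, name: str) -> None:
        # Hot-remove is refused while a host is still using the device.
        if self.owner[name] is not None:
            raise ValueError(f"{name} is in use by {self.owner[name]}")
        del self.devices[name]
        del self.owner[name]

    def compose(self, host: str, kind: str, count: int) -> List[str]:
        # Bind `count` free devices of the requested kind to `host`.
        free = [n for n, d in self.devices.items()
                if d.kind == kind and self.owner[n] is None]
        if len(free) < count:
            raise RuntimeError(f"only {len(free)} free {kind} device(s), need {count}")
        chosen = free[:count]
        for n in chosen:
            self.owner[n] = host
        return chosen

    def release(self, host: str) -> None:
        # Return every device bound to `host` to the free pool.
        for n, h in self.owner.items():
            if h == host:
                self.owner[n] = None
```

For example, a host could call `compose("host-a", "gpu", 2)` followed by `compose("host-a", "memory", 1)` to assemble a logical node. It would then call `release("host-a")` so those devices can be reassigned or physically removed. A real fabric would also need to reprogram the interconnect when bindings change. The sketch leaves that step out.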

To support this capability, Panmnesia participates as an official member in several industry consortia, including the Compute Express Link (CXL) Consortium, the UALink Consortium, the Open Compute Project (OCP), and PCI-SIG.