Building on earlier work that enabled a robotic arm to uncover concealed objects carrying radio frequency identification (RFID) tags, a team from the Massachusetts Institute of Technology (MIT) has enabled a robotic arm to retrieve a hidden object from a pile even when that target object is not tagged.

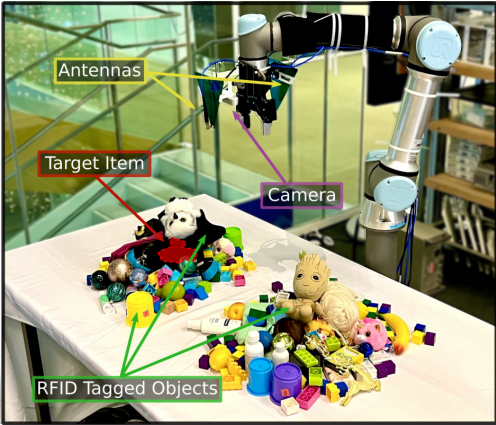

This is thanks in part to FuseBot, an algorithm that enables the system to reason about the likely location and orientation of objects hidden under a pile of other objects. According to the MIT team, FuseBot efficiently removes obstructing objects, some of which carry RFID tags, to extract a target item in half the time it takes other similar robotic systems. Source: MIT


“What this paper shows, for the first time, is that the mere presence of an RFID-tagged item in the environment makes it much easier for you to achieve other tasks in a more efficient manner. We were able to do this because we added multimodal reasoning to the system — FuseBot can reason about both vision and RF to understand a pile of items,” explained the researchers.

In addition to the FuseBot algorithm, the robotic arm uses an attached video camera and an RF antenna to recover an untagged target item from a mixed pile of products. According to the MIT team, the system scans the pile with the camera to build a 3D model of the environment. At the same time, it sends signals from its antenna to locate RFID tags. These radio waves pass through most solid surfaces, so the robot “sees” deep into the pile. Although the target item itself is untagged, FuseBot knows that it cannot be at the exact location of an RFID tag.
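To make the reasoning in the paragraph above concrete, here is a minimal sketch of how a camera-derived height map and RFID tag positions might be combined into a belief about where an untagged item could be hidden. It is not FuseBot's actual model: the 2D grid, the assumption that a cell can conceal the target only if the pile there is at least as tall as the target, and the names `candidate_belief`, `heights`, `tag_cells` and `tag_radius` are all hypothetical simplifications.

```python
from typing import List, Tuple

Grid = List[List[float]]


def candidate_belief(heights: Grid, target_height: float,
                     tag_cells: List[Tuple[int, int]],
                     tag_radius: int = 0) -> Grid:
    """Hypothetical sketch: uniform probability over grid cells that could
    conceal an untagged target, given vision and RFID observations.

    heights    -- pile surface height per cell, e.g. from the camera's 3D model
    tag_cells  -- (row, col) positions of RFID tags located by the antenna
    tag_radius -- cells within this Chebyshev distance of a tag are treated
                  as occupied by the tagged item (assumption)
    """
    rows, cols = len(heights), len(heights[0])
    mask = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Vision cue (assumed rule): the pile must be at least as tall as the target.
            tall_enough = heights[r][c] >= target_height
            # RF cue: the untagged target cannot sit where a tagged item is.
            near_tag = any(abs(r - tr) <= tag_radius and abs(c - tc) <= tag_radius
                           for tr, tc in tag_cells)
            mask[r][c] = tall_enough and not near_tag
    total = sum(sum(row) for row in mask)
    if total == 0:
        # Nothing is consistent with the observations; return an all-zero map.
        return [[0.0] * cols for _ in range(rows)]
    # Normalize so the candidate cells share the probability mass equally.
    return [[1.0 / total if m else 0.0 for m in row] for row in mask]


heights = [
    [0.0, 3.0, 3.0, 1.0],
    [0.0, 3.0, 3.0, 1.0],
    [0.0, 0.0, 2.0, 2.0],
]
belief = candidate_belief(heights, target_height=2.0, tag_cells=[(0, 1)])
for row in belief:
    print(row)
```

Raising `tag_radius` would model larger tagged items; a real system would also account for each item's 3D shape and orientation, which this flat grid ignores.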

FuseBot then combines, or fuses, this data, updating the 3D model of the environment and highlighting the possible locations of the untagged target item. Because the robot knows the size and shape of the untagged item, it can reason about the objects in the pile and the locations of the RFID-tagged items to determine which items to remove. This enables the system to find the target item with the fewest moves.
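One simple way to turn a belief map into a removal decision is a greedy rule: remove whichever visible object sits over the most probability mass. The sketch below assumes that rule, a 2D grid, and hypothetical names (`next_object_to_remove`, `surface_objects`). FuseBot's own policy is described in the paper and is more involved; a greedy choice like this one is not guaranteed to minimize the total number of moves.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]


def next_object_to_remove(belief: List[List[float]],
                          surface_objects: Dict[str, Set[Cell]]) -> str:
    """Hypothetical greedy policy: pick the visible object whose footprint
    covers the largest share of the target's probability mass."""
    def covered_mass(cells: Set[Cell]) -> float:
        return sum(belief[r][c] for r, c in cells)

    # max() keeps the first object listed if two scores tie.
    return max(surface_objects, key=lambda name: covered_mass(surface_objects[name]))


belief = [
    [0.0, 0.0, 0.2, 0.0],
    [0.0, 0.2, 0.2, 0.0],
    [0.0, 0.0, 0.2, 0.2],
]
surface_objects = {
    "mug": {(0, 2), (1, 2)},   # covers 0.4 of the mass
    "plush_toy": {(1, 1)},     # covers 0.2
    "notebook": {(2, 3)},      # covers 0.2
}
print(next_object_to_remove(belief, surface_objects))  # mug
```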

Once an object has been removed from the pile, the system scans the pile again and uses this information to update the model.
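The scan, remove and rescan cycle described above can be written as a small loop. In this sketch, `scan`, `choose`, `remove` and `target_visible` are hypothetical callbacks standing in for the camera and antenna, the decision policy, the arm, and the target detector; the toy pile is a single stack listed top first, so the stand-in policy simply takes the top item.

```python
from typing import Callable, List, TypeVar

State = TypeVar("State")


def mechanical_search(scan: Callable[[], State],
                      choose: Callable[[State], str],
                      remove: Callable[[str], None],
                      target_visible: Callable[[State], bool],
                      max_moves: int = 50) -> int:
    """Hypothetical scan-remove-rescan loop; returns the number of removals."""
    moves = 0
    state = scan()                      # initial model of the pile
    while not target_visible(state):
        if moves >= max_moves:
            raise RuntimeError("target not exposed within max_moves")
        remove(choose(state))           # pull one obstructing object
        moves += 1
        state = scan()                  # rescan and refresh the model
    return moves


# Toy pile: one stack, listed from top to bottom.
pile: List[str] = ["sweater", "stapler", "mug", "plush_toy"]
moves = mechanical_search(
    scan=lambda: list(pile),
    choose=lambda state: state[0],
    remove=pile.remove,
    target_visible=lambda state: bool(state) and state[0] == "mug",
)
print(moves)  # 2
```

Rescanning after every removal means the loop never acts on a stale model, which matters because pulling one item can shift the others in a real pile.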

During trials, FuseBot outperformed systems that relied on vision alone. The robotic arms were tasked with sorting through piles of household items of various shapes and sizes, including office supplies, stuffed animals and clothing, with some RFID-tagged items in each pile.

According to the findings, FuseBot successfully located and extracted the target item 95% of the time, compared with 84% for the vision-only robotic system. FuseBot also located the target item using 40% fewer moves and more than twice as fast.

The paper, “FuseBot: RF-Visual Mechanical Search,” details the team’s findings.