By 2028, DRAM will account for most CXL market revenue, contributing more than US$12 billion.

The key CXL memory expander controller suppliers are Astera Labs, Montage, and Microchip.

High-performance, cost-effective CXL memory expander controllers and CXL switches will be key to the mass adoption of CXL in data centers.

Servers currently face memory capacity and bandwidth challenges, and CXL deployment offers both short- and long-term solutions.

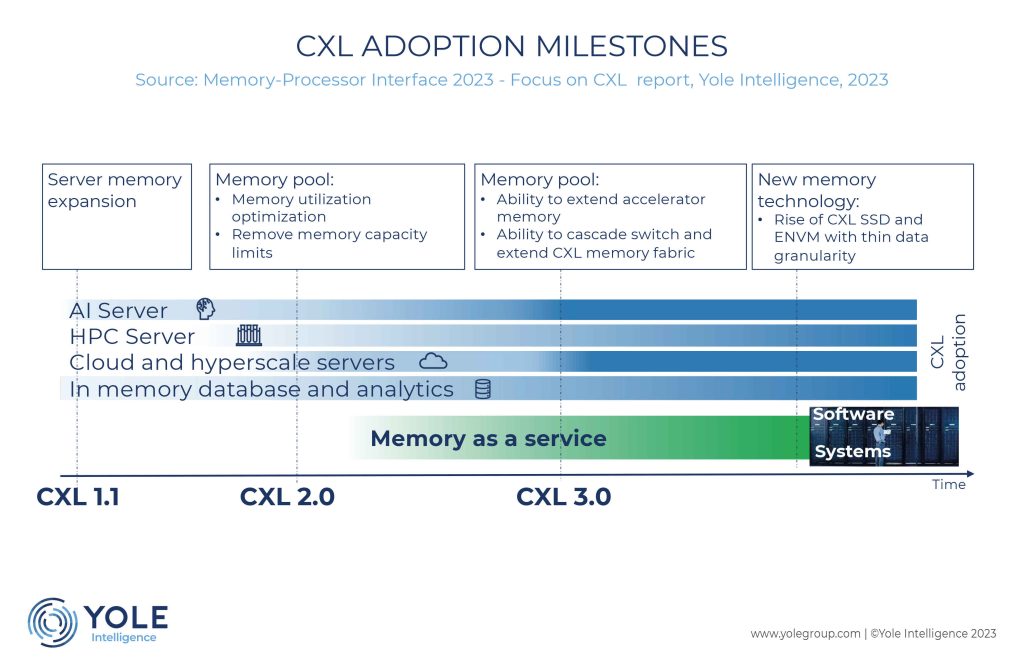

Starting with CXL 1.1, AI cloud servers can benefit from memory expansion, while CXL 3.0 could give accelerators such as GPUs, DPUs, FPGAs, and ASICs direct access to memory pools.

Cloud service providers and hyperscalers are expected to show strong interest in memory pooling, introduced with CXL 2.0, and in composable servers enabled by CXL 3.0.

Database servers, meanwhile, will be able to run larger in-memory databases for faster analytics.

“CXL expansion takes two primary forms: add-in cards predominantly offered by expander controller providers like Astera Labs, Montage, and Microchip, and CXL drives promoted by memory IDMs such as Samsung, SK hynix, and Micron,” says Yole’s Thibault Grossi.

Astera Labs, Microchip, and Montage currently offer CXL expander controller chips. CXL switches, meanwhile, are being considered by numerous players, including Enfabrica, Panmnesia, and Elastics.cloud, typically as blocks embedded within more complex chips.

Xconn, by contrast, intends to provide stand-alone CXL switch chips.

CXL has the potential to transform how memory is used, managed, and accessed. It could usher in a new era of disaggregation and composability, similar to the storage paradigm shift of the 1990s, and potentially spawn an industry focused on CXL memory fabric software, systems, and services.

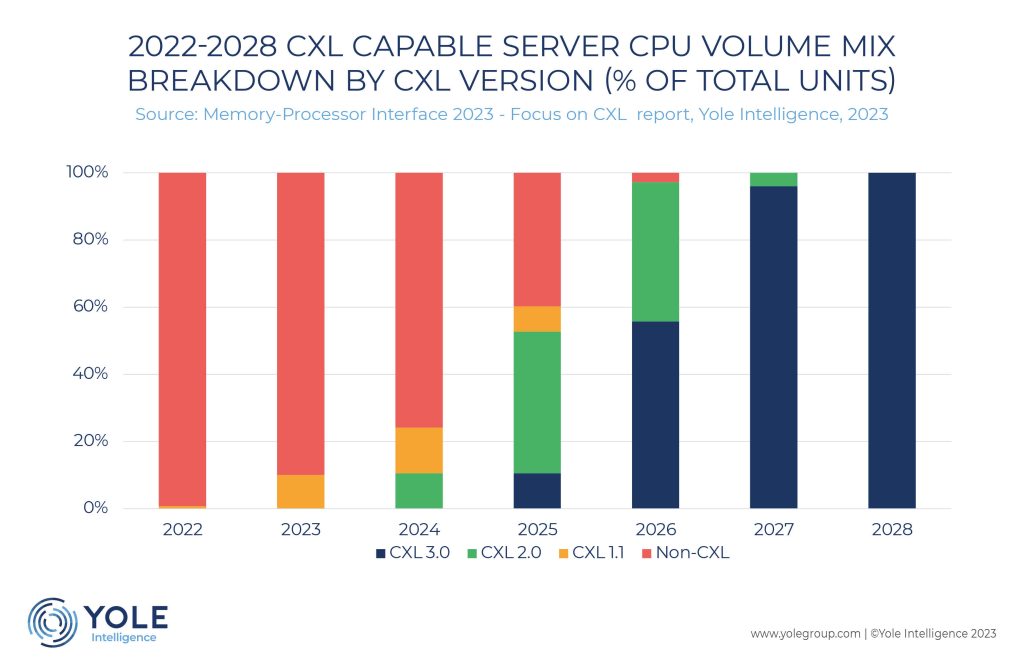

CXL runs on top of the PCIe physical layer. Today's CXL 1.1, based on PCIe 5.0, enables memory expanders to be attached directly to the host. CXL 2.0, also based on PCIe 5.0, will introduce CXL switches, enabling memory pooling across multiple hosts.
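
To illustrate why switch-based pooling matters, the sketch below models a single memory pool shared by several hosts, so capacity released by one host can be reassigned to another instead of sitting stranded in a fixed per-server allocation. It is a minimal model under stated assumptions: the `MemoryPool` class, the host names, and the GiB figures are hypothetical and do not correspond to any CXL API or fabric-manager interface.

```python
from typing import Dict


class MemoryPool:
    """Hypothetical model of a CXL 2.0-style memory pool shared by several hosts.

    Capacity is tracked in whole GiB. Real fabric managers assign memory in
    device-defined regions, which this illustrative sketch does not model.
    """

    def __init__(self, capacity_gib: int) -> None:
        self.capacity_gib = capacity_gib
        self.allocations: Dict[str, int] = {}  # host name -> GiB currently assigned

    @property
    def free_gib(self) -> int:
        """Capacity not currently assigned to any host."""
        return self.capacity_gib - sum(self.allocations.values())

    def allocate(self, host: str, size_gib: int) -> bool:
        """Assign capacity to a host if the pool has room; return True on success."""
        if size_gib <= 0 or size_gib > self.free_gib:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gib
        return True

    def release(self, host: str, size_gib: int) -> None:
        """Return capacity held by a host to the shared pool."""
        held = self.allocations.get(host, 0)
        if size_gib > held:
            raise ValueError(f"{host} holds only {held} GiB")
        remaining = held - size_gib
        if remaining:
            self.allocations[host] = remaining
        else:
            self.allocations.pop(host, None)


if __name__ == "__main__":
    pool = MemoryPool(capacity_gib=512)
    print(pool.allocate("host-a", 384))  # host-a hits a demand peak: True
    print(pool.allocate("host-b", 256))  # not enough free capacity yet: False
    pool.release("host-a", 256)          # host-a's peak passes
    print(pool.allocate("host-b", 256))  # the same capacity now serves host-b: True
    print(pool.free_gib)                 # 128
```

The design choice being illustrated is that capacity belongs to the pool rather than to a server, which is what distinguishes pooling from the direct-attach expansion of CXL 1.1.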

CXL 3.0, built on PCIe 6.0, will add cascaded switches and peer-to-peer connections, paving the way for full server disaggregation and composability.
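
The generation-to-PHY mapping described above can be summarized as a small lookup table. The sketch also computes raw per-direction link throughput as per-lane transfer rate × lanes ÷ 8, using the PCIe 5.0 (32 GT/s) and PCIe 6.0 (64 GT/s) per-lane rates. This deliberately ignores encoding, FLIT, and protocol overhead, so usable bandwidth is lower. The names `CxlGeneration` and `raw_gbytes_per_s` are illustrative assumptions, not terminology from the CXL specification.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class CxlGeneration:
    """Illustrative summary of one CXL generation (hypothetical structure)."""
    name: str
    pcie_phy: str
    gt_per_s: int    # per-lane transfer rate of the underlying PCIe PHY
    switching: str
    headline_use: str


GENERATIONS: List[CxlGeneration] = [
    CxlGeneration("CXL 1.1", "PCIe 5.0", 32, "none (direct attach)", "memory expansion"),
    CxlGeneration("CXL 2.0", "PCIe 5.0", 32, "single-level switch", "memory pooling"),
    CxlGeneration("CXL 3.0", "PCIe 6.0", 64, "cascaded switches, peer-to-peer",
                  "disaggregation and composability"),
]


def raw_gbytes_per_s(gen: CxlGeneration, lanes: int = 16) -> float:
    """Raw per-direction throughput in GB/s; ignores encoding and protocol overhead."""
    return gen.gt_per_s * lanes / 8


if __name__ == "__main__":
    for gen in GENERATIONS:
        print(f"{gen.name} on {gen.pcie_phy}: "
              f"{raw_gbytes_per_s(gen):.0f} GB/s raw per direction (x16); "
              f"switching: {gen.switching}; focus: {gen.headline_use}")
```

For an x16 link, this gives 64 GB/s per direction for the PCIe 5.0-based generations and 128 GB/s for CXL 3.0 on PCIe 6.0, before overhead.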

The CXL standard comprises three protocols (CXL.io, CXL.cache, and CXL.mem), which combine to define three distinct CXL device types. This report focuses on Type 3 devices, which are dedicated to memory expansion.
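
The device types differ in which of the three protocols they implement: Type 1 devices use CXL.io and CXL.cache, Type 2 devices use all three, and Type 3 memory expanders use CXL.io and CXL.mem. The sketch below encodes that mapping as a lookup table. The `Protocol` enum and the helper functions are hypothetical names chosen for illustration, not part of any CXL software API.

```python
from enum import Enum
from typing import AbstractSet, Dict, FrozenSet, Optional


class Protocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEM = "CXL.mem"


# Protocol mix per CXL device type (hypothetical table layout).
DEVICE_TYPES: Dict[int, FrozenSet[Protocol]] = {
    1: frozenset({Protocol.IO, Protocol.CACHE}),                # e.g., caching accelerators without exposed memory
    2: frozenset({Protocol.IO, Protocol.CACHE, Protocol.MEM}),  # e.g., accelerators with their own attached memory
    3: frozenset({Protocol.IO, Protocol.MEM}),                  # memory expanders, the focus of this report
}


def device_type_for(protocols: AbstractSet[Protocol]) -> Optional[int]:
    """Return the device type implementing exactly these protocols, or None."""
    for device_type, required in DEVICE_TYPES.items():
        if required == frozenset(protocols):
            return device_type
    return None


def is_memory_expander(device_type: int) -> bool:
    """True if the type exposes memory via CXL.mem without using CXL.cache."""
    protocols = DEVICE_TYPES[device_type]
    return Protocol.MEM in protocols and Protocol.CACHE not in protocols


if __name__ == "__main__":
    for device_type, protocols in DEVICE_TYPES.items():
        names = ", ".join(sorted(p.value for p in protocols))
        print(f"Type {device_type}: {names}; memory expander: {is_memory_expander(device_type)}")
```

Only Type 3 satisfies `is_memory_expander`, which is why the memory expansion and pooling discussed here concern Type 3 devices.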

Although CXL is media-agnostic, it is expected to be used primarily for memory expansion and pooling, predominantly through DRAM memory expanders. These expanders can take the form of add-in cards populated with DRAM DIMMs, or drives in EDSFF form factors with embedded DRAM. Drives are poised to gradually supplant add-in cards thanks to their optimized form factors, compatibility with server chassis standards (critical for cooling), and ease of handling.

Finding the right balance between cost and capacity will be pivotal in determining when CXL drives will outpace add-in cards.

CXL memory expander controllers are complex chips, and in 2023 they are expected to cost more than US$60 each, reflecting both an emerging market and advanced manufacturing nodes. CXL switches, with their large lane counts (256 lanes) and reliance on aggressive process nodes (such as 16nm FinFET for initial prototypes), are expected to be even more expensive.

However, pricing for both CXL controllers and switches is projected to normalize as CXL gains widespread deployment.